Copyright © 2009 Gabriel M. Beddingfield

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is available here and here and is entitled "GNU Free Documentation License".

Please see also the acknowledgements.

Revision History

| Revision | Date | Author | Description |
|---|---|---|---|
| Revision 8 | 2009-Nov-16 | gabriel | Replace PNG's with JPG's to handle web traffic better. |
| Revision 7 | 2009-Nov-11 | gabriel | Finish out the SVG/PNG concepts for the screens. Tweak the sampler spec. Add flow chart. |
| Revision 6 | 2009-Nov-10 | gabriel | Lower minimum specs. Update images for matrix view and sequence editor. |
| Revision 5 | 2009-Nov-09 | gabriel | Add license and other legal matters. Add several definitions. |
| Revision 4 | 2009-Nov-09 | gabriel | Added ideas regarding jazz comping. Added an acknowledgements section. |
| Revision 3 | 2009-Nov-06 | gabriel | Continued to clarify UI. Add new image for GUI concepts (based on SVG original). |
| Revision 2 | 2009-Nov-05 | gabriel | Flesh out the Matrix View, Sample Editor, and Instrument Rack sections. Clean up some concepts. |
| Revision 1 | 2009-Nov-04 | gabriel | Fix myriad spelling errors. Thanks Nathan! |
| Revision 0 | 2009-Nov-04 | gabriel | Initial draft of Composite specification |

Abstract

Composite is a software application/system for real-time, in-performance sequencing, sampling, and looping. It has a strong emphasis on the needs of live performance improvisation. It is built around the Tritium audio engine, which includes LV2 plugins and developer libraries.

This specification defines how Composite will look and operate from the user's perspective (which includes the Tritium plugins and public libraries). Therefore it defines names, concepts, and interfaces. It will include diagrams and mock-ups of how the software is intended to look (but the actual look and feel will evolve over time). This document is a work in progress, and will be continuously revised as things change or develop.

This document does not discuss algorithms or implementation — all of which will be discussed elsewhere. This document covers external interfaces.


This document covers Composite version 1.0, which includes the Tritium audio engine. Composite currently does not exist in any form.

This document refers to several trademarks that are not associated with this project in any way. A listing and a standard disclaimer appear in Section 5.

Composite is a music-making software tool that allows an individual musician to create, revise, and control a musical composition in real-time, even as part of a performance. It provides the musician with a simple way to organize and reuse his various resources (samples, loops, sequences, songs, etc.) quickly and arrange them without regard for their type. It also provides full automation so that it can be incorporated into complex rigs. Unlike other such software, it is built on open standards and free software, which gives the musician greater flexibility and control over how Composite is used.

The core of Composite is the Tritium audio engine, which is a library that includes a sampler, sequencer, and various utilities needed to provide the core functionality of Composite. This includes audio plugins that are built using Tritium.

Composite comprises a software application that is essentially a musical instrument. It enables the user to aggregate different audio resources and manipulate them in real time for live performance. The manipulations available are sequences (e.g. to drive a synthesizer), step sequences (programs that work relative to an input note), samples (recording, editing, playing), looping of sequences, looping of audio, and real-time tempo synchronization.

Composite is built around the Tritium audio engine, which provides the core audio and sequencing functionality. It is intended that Tritium would become a spin-off library with a stable API so that other projects would be able to reuse the code. This includes a synthesizer plugin built on the Tritium sampler.

Currently, the software application that most resembles this is Ableton's Live™. However, this software has its roots in Hydrogen, an integrated sequencer and sampler that focuses on creating drum patterns. The ideas were originally discussed on the hydrogen-devel mailing list, and the code base is actually a fork of Hydrogen. However, the Hydrogen developers determined that this application is a completely new direction, and not appropriate for Hydrogen.

Composite was started by Gabriel Beddingfield <gabrbedd@gmail.com>. It will be developed in an open-source manner. As more developers volunteer, different maintenance schemes will be developed.

This document is intended to communicate what the final product, Composite, should look like. This document has the following sections:

Scope — Describes what the project is about in broad terms. Identifies the industry and people involved.

Referenced Documents — Points to other documents that are important to the development of Composite and this document.

Requirements — A detailed listing of exactly what the interfaces of the software shall be. This includes screen shots, plugin interfaces, etc.

Qualification of Completion — Defines the criteria that, once met, means we have accomplished the goal.

Terms, Abbreviations, and Definitions — Some people use words differently. Technical terms and abbreviations are clearly described here.

While this document is contained in a revision control system for fully granular history, revisions to this document shall happen like this:

No changes shall be tracked before revision 0. Revision 0 is something that is agreed upon when this happens. It shouldn't be too complete... but relatively fleshed out.

After this, revisions should be logged according to DocBook's <revhistory> scheme.

Sections and paragraphs that are touched in the revision should be marked with the revision attribute. It should look like revision='5' for the first one. Later revisions shall use a comma-separated list like revision='5,6,7'.

A FAQ gives quick and concise answers about this project.

See the main page for links to all documents, source code, roadmaps, etc.

The following software applications and concepts have shaped and inspired Composite:

Live™ (Ableton)

Reason™ (Propellerhead Software)

seq24

non-sequencer

freewheeling

sooperlooper

Hydrogen

The iPhone™

Indamixx (touchscreen)

DJ-ing

InConcert

Specimen

This section shall be divided into subsections that describe how the software should operate. Note that it does not discuss how the software is implemented. Conformance to this section (as a whole) establishes the completion of the software.

Gabriel is a guitar player in a rock band. His band sounds pretty vanilla, and he wants to add synths and sound effects to the live show to add some depth. However, he can't give up his duties as guitar player to run a keyboard rig. He's tried various samplers and sequencers (controlled by a footswitch), but they force the band to use a click (which everyone hates).

While surfing the net, Gabriel finds out about Composite. The software allows him to set up different sections of his song and control them with a MIDI footswitch (start, stop, loop, fast-forward, etc.). Even better, it comes with a tap-tempo that will also sync the beat to the taps (not just the tempo). Now the band is able to use the sequences and loops without having to use a click track. Audiences love the new sounds and textures in their music.

Anthony is a DJ who has several samplers, sequencers, and vinyl records that he uses in his shows. However, programming the sequencers takes a lot of prep work. He'd like to use a laptop in his rig, but doesn't want to shell out the cash for something like Live or Reason.

Anthony was talking to Gabriel about his woes, and heard about Composite. He checked out the software, and was impressed at how easily he could layer drum loops and sequences... not to mention controlling the tempo. He was able to load his sample library into Composite, and can easily pull them out and add them anywhere he wants in a loop or sequence.

In a live show, Anthony was playing a popular dance song, and was inspired to add a different beat to it... transitioning to a couple of different moods... and then into another dance tune. While one loop was playing, he was able to quickly assemble some of his other sequences and samples into another loop. Once done, he was able to cue it up to start on the next go-round. After about 2 or 3 cycles, he was in the new song, and in a new level of street cred.

James likes to play freaky sounds at random. People talking. Stuff he downloaded at freesound.org. Stuff he took from movies. He usually uses Specimen, but it's starting to show its age. The next-best option is LinuxSampler, but editing samples has to mostly be done off-line. Ditto for Hydrogen.

When he found Composite, he liked that it was a plugin that could be used in his favorite Foomatic sequencer. The plugin enabled him to quickly load and control samples from his library.

Composite intends to accomplish the following. Note that the word “clip” is used quite a bit. See the glossary for its definition.

General Goals

Composite shall be free software, as defined by the FSF. Licence shall be GPL v2 or later.

Composite shall be able to be included as a part of a hardware product. Therefore, any utilization of LinuxSampler shall be fully optional and through a run-time link (like a plugin).

Minimum requirements: 500 MHz processor, 196 MB RAM, Running X11 with a lightweight window manager. Windows and/or OS X may require faster machines.

Features and knobs must not overwhelm a new user. Advanced features need to be out of the way. If necessary, an advanced feature will not be supported if supporting it means cluttering the UI.

Application/GUI Goals

The UI shall be optimized for a netbook with a 7-in. screen of 800x480 pixels (120 px/in). Larger screens will be supported, but the “netbook interface” will have priority. (Note: this is the screen resolution... not the size of the Composite window!)

Enable real-time, useful editing that is beneficial to a DJ or musician in a real performance.

Provide an interface to freely mix and assemble various library components (sequences, loops, samples)

Provide an arpeggiator / step sequencer system to make MIDI "programs" that respond to input notes. This can sequence not just notes, but real-time parameter changes while the note plays. This should be available as a clip and as sort of a MIDI FX.

Sequence clips may be quickly transposed to whatever key the musician selects (or is playing in).

Debatable: Audio clips may be quickly transposed to whatever key the musician selects (or is playing in).

Debatable: Sequences that have an established chord progression can be altered quickly, easily, and briefly (one-shot) with an altered chord. This idea is especially to suit jazz comping.

While playing, everything that happens is recorded (logged) so that the musician can save a performance to evaluate later. During or at the end of the session, the user will have the option to save the current performance. Saved recordings shall be repeatable, even if the clips that comprise them were edited during or after the session.

Undo/redo for the current session.

Allow editing of a clip even while it is playing or looping.

Clips may be triggered so that they play back synchronously (bar lines align), cue-synchronously (bar lines align, but don't start until the next beat 1), beat-synchronously (beats align, but not bar lines), and asynchronously (clip tempo and beats ignore the transport).

Clips may be halted immediately, cued to the next beat, or cued to the next bar.

The transport can follow the JACK Transport and can also be the JACK Transport master.

JACK Transport support shall enable various types of synchronization, such as full synchronization (B:b.t lines up) and beat-synchronization.

Able to schedule tempo and meter changes in a song.

Tap-tempo with beat-synchronization (like InConcert), which overrides the programmed tempo setting.

Remote control via OSC and MIDI.

Provide a customizable MIDI implementation, but also provide a default implementation.

Sequences can provide parameter automation in addition to (or in lieu of) notes.

Sequences can sequence through just triggers (no note OFF's), or have note lengths.

Note lengths at the end of a clip do not have to align with the length of the clip. The note can overlap the end of the clip.

Support for mute grouping.

Sequences can support randomization of timing and pitch, as well as lead/lag scheduling.

Unlimited sequencing resolution. This includes tuplets... but also non-rational divisions.

MIDI and Audio able to export to different tracks.

For any components that Tritium supplies as an LV2, use the LV2 interface for Composite, if at all possible. This way, the LV2 plugin is not an unused, untested plugin. This includes the Tritium sampler and the Tritium looper (if it is different from the sampler).

Debatable: a scripting engine to allow the user to write small programs to extend functionality. Suggest Python. However, this might be a Bad Idea.

Plugin support: LV2 host (fx, synth, midi), DSSI host, LADSPA host. Note: DSSI and LADSPA support may be through a shim like Naspro.

Audio driver support: JACK only (including MIDI).

Platform Support: Linux, OS X, Windows

See “Sampler Goals” below.

See “Looper Goals” below.

The entire audio back-end is available as a developer library... but not necessarily as a stable API.
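The four clip trigger modes listed above (synchronous, cue-synchronous, beat-synchronous, asynchronous) can be sketched as a small scheduling function. This is only one possible reading of those goals, not a defined Composite or Tritium interface: the function name `resolve_trigger`, the fixed meter, and measuring the transport position in beats are all assumptions made for illustration.

```python
import math

def resolve_trigger(mode, now_beats, beats_per_bar=4):
    """Return (start_beat, clip_offset_beats) for a trigger request.

    now_beats is the transport position in beats, counted from the first
    bar line. All names here are hypothetical; the spec defines only the
    four behaviours, not this interface.
    """
    if mode == "synchronous":
        # Start now, entering the clip at the transport's position within
        # the bar so that bar lines align.
        return now_beats, now_beats % beats_per_bar
    if mode == "cue-synchronous":
        # Wait for the next beat 1, then start from the top of the clip.
        next_bar = math.ceil(now_beats / beats_per_bar) * beats_per_bar
        return float(next_bar), 0.0
    if mode == "beat-synchronous":
        # Wait for the next beat; bar lines are not required to align.
        return float(math.ceil(now_beats)), 0.0
    if mode == "asynchronous":
        # Ignore the transport entirely.
        return now_beats, 0.0
    raise ValueError("unknown trigger mode: " + mode)
```

For example, a trigger that arrives at beat 5.5 in 4/4 starts at beat 8 in cue-synchronous mode and at beat 6 in beat-synchronous mode. Halting a clip "at the next beat" or "at the next bar" could reuse the same rounding.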

Sampler Goals

Provide the sampler as a C++ library

Provide a stand-alone, plugin interface via LV2 and DSSI.

Support loading and manipulating samples on the fly.

Record samples and use/manipulate them on the fly.

Organize drum kits and their samples.

Provide tagging and logic to map GM drum kits

Able to import Hydrogen drumkits, or use them without importing.

Provide advanced sample playback, including marking points to loop (for sustain)

Possibly inappropriate: also serve as the looper interface. See next group.

Looper Goals

Support for time-stretch in the loops.

Support for beat-slicing (and a way to save a beat-sliced loop).

Overdub, re-record, undo, etc.

User Library Goals

Provide a library that allows intuitive organization and fast access to the musician's resources. This includes the user's clips, plugins, audio system resources, operating system resources, and online resources (e.g. freesound.org).

Any item from the library can be loaded in real time without disrupting any currently running audio. Clips have a priority in that they should be able to be loaded and played within 20 ms.

Import/Export system that includes the ability to import and export Standard MIDI Files.

Composite will not be:

A multi-track audio recorder/player. Use Ardour.

A multi-track MIDI sequencer focused on composition. Use Rosegarden, Muse, etc.

A high powered, disk-streaming sampler. Use LinuxSampler.

A harmonic synthesizer. Instead, we'll provide access to synthesizer plugins and MIDI in/out so that you can access your favorite synths.

Will not support LASH. Possibly in a future version, but not 1.0.

Will not support misc. sampler formats (GIG, SF2, etc). Possibly in a future version, but not 1.0.

Will not support non-destructive editing beyond a single session with undo/redo and the auto-recording thing.

When the user starts the program, he will be greeted with a splash screen. However, this is only required if it takes more than one (1) second to start the program.

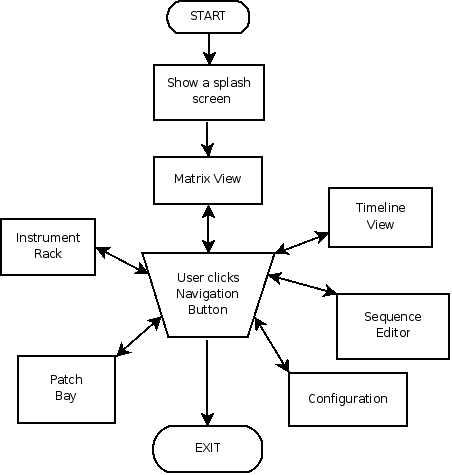

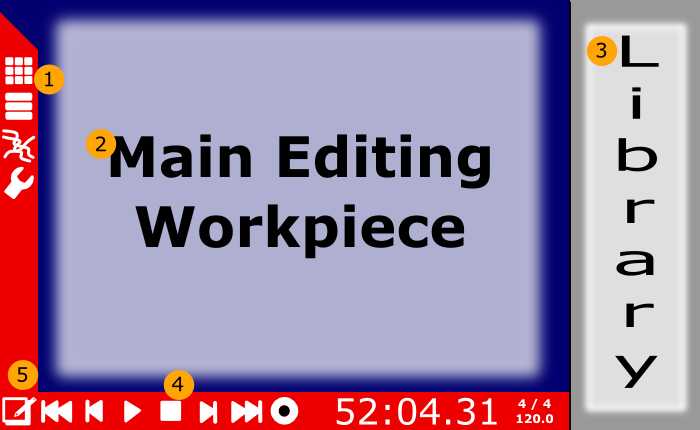

From there, the user will be placed in the Matrix View. With the navigation buttons (see Figure 2) the user will be able to quickly jump into any view that they need. This flow chart demonstrates this:

Each of the screens is detailed in the sections that follow.

This section is divided into several subsections based on the major components that comprise Composite.

The GUI will be a C++ application that is built using the Qt toolkit. It will not be a typical menu-driven or Multiple Document Interface (MDI) application.

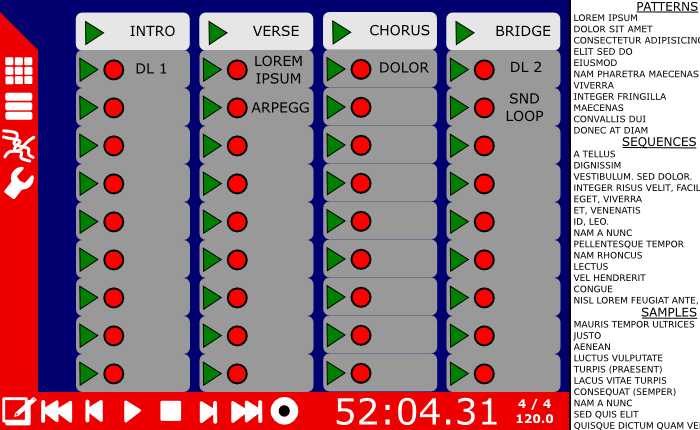

All of the screens in Composite are supposed to follow the concepts found in Figure 2. First, note that this image is full size for a 100 PPI screen (700x430 pixels). Along the left and bottom edges there are several tools that can be used to switch contexts (or modes). The left edge is for context switching. The bottom edge is for things like transport controls. In the center is a central widget used for manipulating the main workpiece for the mode. On the right is a widget where resources can be accessed quickly to be added to (or saved from) the main workpiece.

Figure 2. GUI Concepts

Navigate to views: matrix view, track view, instrument rack, and application settings.

A container for a main editor widget, such as the matrix view, sequence editor, etc.

A container for resources, such as their library of samples, sequences, etc. This widget will use kinetic scrolling to access the resources faster and more intuitively.

Transport tools and indicators.

The editor icon. Drag items from wherever they are to this corner and immediately the editor will open up.

At least for the resources widget, the intention is to make use of kinetic scrolling to make access to the resources fast and intuitive.

The objective is actually to make the UI optimized for use with a touchscreen... but also make it effective for mouse and keyboard use. While this seems exotic today (for a desktop app), it will not be exotic in 5-10 years.

A single session (with all its groups, clips, and samples) is called a "scene" (rather than a "song").

Widgets, icons, etc. will follow these rules:

There will be a global PPI setting for the application that will set sizes and whatnot. Three sizes will be supported for 1.0: 85, 100, and 120 PPI.

The minimum widget size for anything selectable (icons, rows, blocks) will be .375-in. (This translates to 32px @85, 38px @100, and 45px @120.) [1]

Widgets will be sized as a multiple of the minimum widget size. Exception: Widgets within an editor may need to be sized explicitly based on the content.

As much as possible, use zero spacing between widgets.

If the standard size rule must be broken, try to do so by half (.1875-in).

Font layout is more tricky. Absolute minimum font will be 9px tall (irrespective of screen resolution). The preferred minimum font will be .13-in tall. So, if a widget has a 9px font it will usually need a way to briefly “zoom” the font to .13-in or .18-in for examination. Relative font sizes will need to be evaluated as the UI develops.

The user will be able to calibrate his display for his pixels-per-inch (PPI) setting. Sizes of UI widgets will be affected accordingly. We will try to auto-detect, but provide an override. [2]
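The pixel sizes quoted in the rules above follow directly from multiplying the physical size by the PPI setting and rounding to the nearest pixel. A minimal sketch of that arithmetic (the function name `inches_to_pixels` is hypothetical, and rounding half up is an assumption that happens to reproduce the quoted figures):

```python
import math

MIN_WIDGET_INCHES = 0.375  # minimum selectable widget size from the rules above

def inches_to_pixels(inches, ppi):
    """Convert a physical size to whole pixels, rounding half up."""
    return math.floor(inches * ppi + 0.5)

# The three PPI settings planned for 1.0
widget_sizes = [inches_to_pixels(MIN_WIDGET_INCHES, ppi) for ppi in (85, 100, 120)]
print(widget_sizes)  # [32, 38, 45]
```

The same conversion would apply to the half-size exception (.1875-in) and to the preferred font heights.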

The Matrix View is the focal point of the Composite work-flow. It is here that clips are grouped together, and triggered as a group or individually.

The vertical columns are a group (even though the figure currently says "Loop"). The expectation is that a group will usually (but not always) be looped by itself (exclusive of any other groups). A group will typically represent something like an "intro" or a "verse" or a "chorus" in a song.

Each item in a group can be triggered, stopped, or edited individually... as if it were an audio sample.

There is no hard horizontal grouping. However, horizontal rows may be enabled or disabled as a group. Thus, if the user wishes for horizontal rows to be like a single-instrument "track," they can accomplish it like this. However, there is no need to declare it.

When you drag the clip onto the group, a box will be allocated. The box will have a trigger (probably a triangle for “Play”), a record button (a red circle), the name of the clip, and the length of the clip (in beats). The length of the group will be the length of the longest clip. All other clips will loop while the longest one plays. If the clips do not align (e.g. if the group is 6-beats, a 4-beat clip will have 2 beats left over), then the clip will “wrap around.” [3] [4]
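The difference between "wrap around" and the "truncated" alternative illustrated in footnote [3] comes down to whether the shorter clip restarts when the group restarts. A small sketch, with a hypothetical function name and 1-based beat numbers to match the footnote:

```python
def clip_beats(clip_len, group_len, total_beats, wrap=True):
    """List which beat of the clip sounds on each beat of group playback.

    wrap=True:  the clip keeps cycling on its own length ("wrap around").
    wrap=False: the clip restarts whenever the group restarts ("truncated").
    """
    beats = []
    for i in range(total_beats):
        position = i if wrap else i % group_len
        beats.append(position % clip_len + 1)
    return beats

print(clip_beats(4, 6, 12))              # wrap around
print(clip_beats(4, 6, 12, wrap=False))  # truncated
```

With a 4-beat clip in a 6-beat group, the first call yields 1 2 3 4 1 2 3 4 1 2 3 4 and the second yields 1 2 3 4 1 2 1 2 3 4 1 2.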

Audio clips always route to the sampler/looper, which resolves to an Audio Out (L&R) and possibly per-sample outputs.

XXX TO-DO: How do we route sequences?? One way would be to define it in the sequence. But then the sequence clips are not portable when used in another context. Another way would be to define each row as sort of a MIDI Bus. But this puts us almost into a multi-track paradigm.

To edit a clip, drag the clip onto the edit icon. This will take you to the Sequence Editor (see the section called “Sequence Editor”).

To convert a group into a sequence, drag the group's heading to the Sequence Editor. When you return to the matrix view, you will see a single clip at the top for the group. [5] If this was a mistake, though... you can un-do.

To save a clip, drag it to the library. You'll be prompted for how to store it (e.g. for saving with a new name).

To remove a clip, drag it to the trash bin. You will not be prompted with “are you sure you don't want to save your work?” If you really need it back, un-do or save the session.

The purpose of the sequence editor is to program note sequences for drum patterns, musical notes, etc. The sequence will become a single clip.

Sequences in Composite can contain other sequences. However, sequences must not contain themselves — neither directly nor in a sub-sequence. Sequences can also include audio samples and audio loops.
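The rule that a sequence must not contain itself, directly or through a sub-sequence, amounts to keeping the containment graph acyclic. One way to check this before adding a sub-sequence is a reachability search. This is only a sketch: representing sequences as a dictionary of names is an assumption, and `would_create_cycle` is a hypothetical helper.

```python
def would_create_cycle(children, parent, child):
    """Return True if placing `child` inside `parent` would make a
    sequence contain itself.

    children maps a sequence name to the names of the sequences it
    already contains (hypothetical representation).
    """
    # A cycle appears exactly when `parent` is reachable from `child`.
    stack = [child]
    seen = set()
    while stack:
        name = stack.pop()
        if name == parent:
            return True
        if name in seen:
            continue
        seen.add(name)
        stack.extend(children.get(name, []))
    return False

children = {"Song": ["Verse"], "Verse": ["Pattern 1"]}
print(would_create_cycle(children, "Pattern 1", "Song"))  # True
print(would_create_cycle(children, "Song", "Pattern 1"))  # False
```

An editor could run a check like this when a clip is dropped onto the top portion of the Sequence Editor and refuse the drop if it returns True.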

The top portion of the window shows all the subsequences and audio clips used in this sequence. It also shows their relative position in a time-line. The bottom portion has a grid-like pattern editor with a piano roll (or drum kit name list) on the left.

If you wish to edit a subsequence, drag it down into the editor window and start editing the beats. Edits will be effective for every like-named sequence. So if your sequence is called “Pattern 1”, then your edits will update all the patterns that are called “Pattern 1.”

Since sequences can contain subsequences (which can also contain subsequences), the user is able to keep track of where they are through a breadcrumbs-like widget between the sequence overview (top) and the pattern editor (bottom).

To add samples, loops, and existing sequences from your library, simply drag them from the library to the top portion of the screen where you want them to sound.

However, if you want to add a sample and sequence it like a drum, drag the sample onto the piano roll. Now you can program it with the matrix editor.

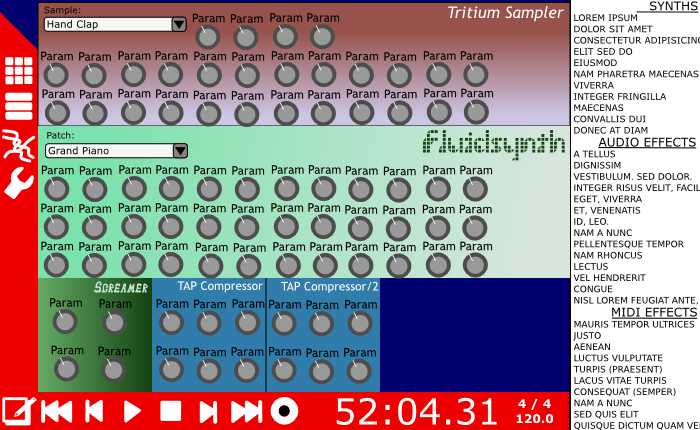

Audio plugins (whether they are synths or effects or whatever) are collected on the instrument rack and ordered however the user wants them. The user will be presented with the parameters for each plugin. Composite will generate this UI.

There will also be a method (to be determined) to launch the plugin's own GUI — if it has one. The plugin's GUI will be launched in its own window. [6]

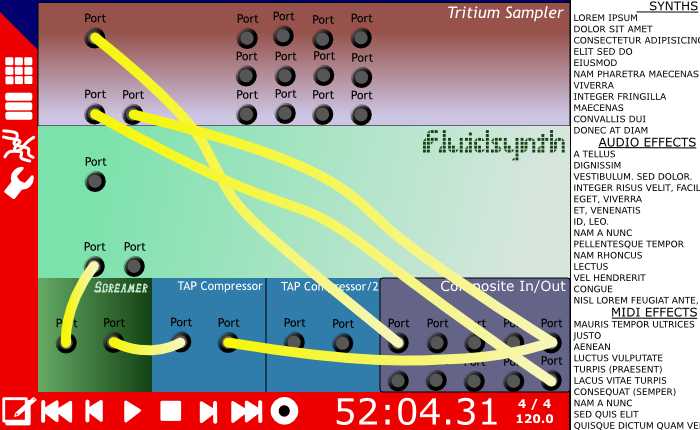

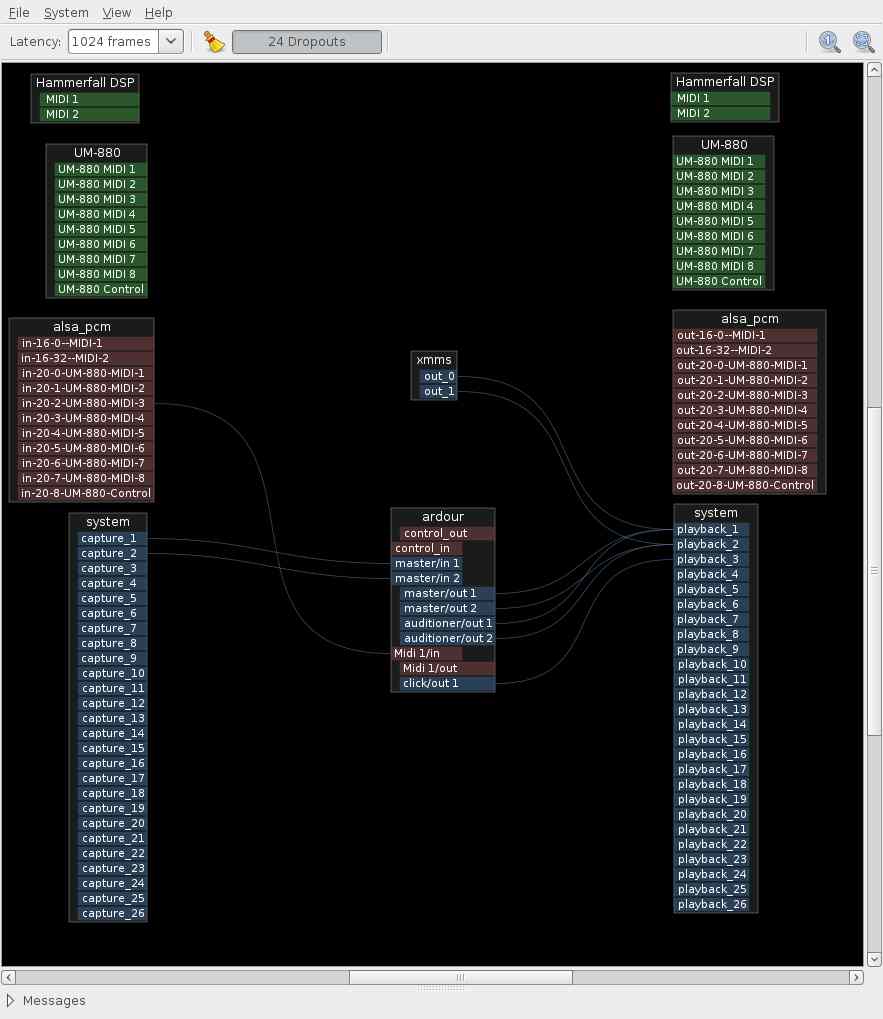

To edit connections and patches, switch to the rear view of the rack. (XXX TO-DO: How??) Here, input and output ports are shown like jacks. To route patches, draw lines like a patch cable between in's and out's. [7] Alternatively, we may supply a UI where each instrument is a box that you wire up (like Alsa Modular Synth or Patchage; see Figure 7).

The library at the right shows all the plugins that are available on the system. To add one to the rack, simply drag it onto the rack. To remove one from the rack, drag it to the trash bin.

The main ins and outs of Composite will always appear as a persistent rack space (either at the top or the bottom). This allows you to explicitly control what goes to the main outs of Composite.

As discussed before, handling patches might be done in a manner similar to Alsa Modular Synth or Patchage:

The audio engine for Composite will be a stand-alone (C++) library (Tritium) that can be embedded into other applications. It will have both an LV2 and a DSSI front-end. When used with Composite it will be used through the LV2 interface. This means that the same functionality available to Composite will be available to any other LV2 host.

Disclaimer

This interface definition is being written before I've ever written an LV2 plugin. So, it lays out conceptually the interface that is needed. Details will surely change.

First, the sampler will be a “dumb” audio processing device. It shall have no notion of ticks and beats or anything that has to do with sequencing. It will only know frame offsets. It must be driven by a sequencer.
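Because the sampler only understands frame offsets, the sequencer driving it has to translate musical time into frames. A minimal sketch of that translation, assuming a constant tempo and a 48 kHz sample rate (both illustrative values; `beats_to_frames` is a hypothetical name, not part of any Tritium interface):

```python
def beats_to_frames(beats, bpm, sample_rate=48000):
    """Convert a position in beats to an absolute frame count."""
    seconds = beats * 60.0 / bpm
    return round(seconds * sample_rate)

# One beat at 120 BPM lasts half a second, i.e. 24000 frames at 48 kHz.
frame = beats_to_frames(1, 120)

# Within a processing cycle of 256 frames (an assumed buffer size), the
# event lands in cycle 93 at offset 192.
cycle, offset = divmod(frame, 256)
print(frame, cycle, offset)
```

Scheduled tempo changes would make the conversion piecewise, but the sampler itself would still see only the resulting frame offsets.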

The sampler shall supply the following input ports:

MIDI In, similar to Event In... but less capable.

Possibly: Audio In, for loading samples directly into the sampler from a PCM stream.

The sampler shall supply the following output ports:

MIDI Out, for notification of errors and status.

Main Audio Out L

Main Audio Out R

Optionally, one audio out for each sample/instrument loaded into the sampler.

XXX TO-DO: Write a MIDI implementation.

XXX TO-DO!

State the method(s) to be used to demonstrate that a given body of software is a valid copy of this specification.

The following people have been very helpful in the formulation of this specification, and I could not have done it without them.

The Hydrogen Dev Team — Especially Sebastian Moors and Michael Wolkstein. You guys have been very encouraging in this process.

Patrick Shirkey — For stirring up interest, sharing ideas, and helping with them. This project wouldn't be happening if not for Patrick.

Nathan Lunt — Feedback, ideas, encouragement, spell check.

James Lovell — Sharing excellent insight into jazz improvisation and how Composite could be useful in that arena.

Thorsten Wilms — many good suggestions, including the use of breadcrumbs for the Sequence Editor.

Trademarks used in this document are the property of their respective owners. This project is not associated with or endorsed by the trademark owners. Trademarks used include, but are not limited to:

Live, Ableton Live, and Ableton are trademarks of Ableton AG.

Reason is a trademark of Propellerhead Software, AB.

The iPhone is a trademark of Apple, Inc.

The following content was also used:

Figure 7 is ©2009 by David Robillard. Acquired from http://drobilla.net/software/patchage/.

A

- ADSR

A type of envelope generator that allows you to control the Attack, Decay, Sustain, and Release parameters. Generally, the parameters are proportional to the velocity.

Read more about this in the Wikipedia Article ADSR Envelope

See Also Envelope Generator, Attack, Decay, Sustain, Release.
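To make the four phases concrete, here is a minimal sketch of an ADSR envelope as a function of time. It assumes straight-line segments, a full level of 1.0, and a note held long enough to reach the sustain level before release. These are simplifications for illustration, not a statement of how the Tritium sampler shapes its envelopes.

```python
def adsr_level(t, note_length, attack, decay, sustain, release):
    """Envelope level (0.0 to 1.0) at t seconds after the note is triggered.

    The note is released at note_length seconds. attack, decay, and
    release are times in seconds; sustain is a level between 0 and 1.
    """
    if t < 0:
        return 0.0
    if t >= note_length:
        # Release: fall from the sustain level to 0.
        elapsed = t - note_length
        if release <= 0 or elapsed >= release:
            return 0.0
        return sustain * (1.0 - elapsed / release)
    if t < attack:
        # Attack: rise from 0 to full level.
        return t / attack
    if t < attack + decay:
        # Decay: fall from full level to the sustain level.
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    # Sustain: hold until the note is released.
    return sustain
```

With attack 0.1 s, decay 0.2 s, sustain 0.5, and release 0.4 s on a one-second note, the level is 0.5 halfway through the attack, 1.0 at the end of the attack, 0.5 during the sustain, and 0.25 halfway through the release.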

- Attack

This is the first phase of an ADSR envelope, and is the amount of time to turn the parameter up from 0 to full velocity after triggering the note.

See Also ADSR.

- Attenuation

In filters and mixers, this is the amount that a signal is reduced (volume).

See Also Roll-off.

B

- Band-Pass Filter

A filter that preserves a certain band of frequencies, and attenuates (silences) all others. This is often done by combining a high-pass and a low-pass filter.

See Also Filter, High-Pass Filter, Low-Pass Filter.

- Beat Slicing

Dividing up an audio loop into smaller samples so that the samples can be played back (sequenced) at a different tempo.

For example, suppose you have a recording of a drummer playing for two measures. However, you want to play it back at a slightly higher tempo. By dividing up the recording (usually where the drum hits occur) and playing back the chunks with a sequencer, it effectively changes the tempo without degrading sound quality.
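The rescheduling half of beat slicing is simple arithmetic: each slice keeps its own audio, and only its trigger time is scaled by the ratio of the tempos. A sketch under the assumption that the slice onsets are already known (detecting the drum hits is the hard part and is not shown; the function name is hypothetical):

```python
def reschedule_slices(onsets_seconds, original_bpm, new_bpm):
    """Return new trigger times for slices cut at onsets_seconds.

    Each slice still plays at its original speed, so its sound is
    unchanged; only the spacing between slices changes.
    """
    ratio = original_bpm / new_bpm
    return [t * ratio for t in onsets_seconds]

# Four hits on the beat at 120 BPM, played back at 150 BPM
new_times = reschedule_slices([0.0, 0.5, 1.0, 1.5], 120, 150)
print(new_times)  # roughly 0.0, 0.4, 0.8, 1.2 seconds
```

When the tempo is raised, each slice has less time before the next one starts, so its tail is cut short or overlaps the next slice. When the tempo is lowered, short gaps appear between slices.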

C

- Clip

A sequence, loop, sample, or combination of all three.

- Clipping

A phenomenon that happens to a signal when the signal is too large for whatever is receiving it. The peaks of the signal (which are normally smooth curves) get cut off straight at the max volume (clipped). This distorts the sound and is usually undesirable.

An example of clipping is when you play music louder than your speaker can handle. Parts of the music sound harsh and fuzzy.
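In digital terms, hard clipping simply limits every sample value to the largest magnitude the receiver can represent. A minimal sketch, assuming samples are floating-point values with a full-scale limit of 1.0:

```python
def hard_clip(samples, limit=1.0):
    """Cut off any sample whose magnitude exceeds limit."""
    return [max(-limit, min(limit, s)) for s in samples]

print(hard_clip([0.5, 1.4, -2.0, -0.3]))  # [0.5, 1.0, -1.0, -0.3]
```

The values that were inside the limit pass through untouched. The peaks are flattened, and that flattening is what produces the harsh, fuzzy sound.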

- Comping

Accompanying a soloist, usually playing chords. In improvisation, comping is also a skill of improv, with the comping musician making chord substitutions based on the style and melody that the soloist is playing.

- Cutoff Frequency

On high-pass and low-pass filters, this is the frequency that divides between those that pass, and those that are attenuated (silenced). In a high-pass resonance filter, or a low-pass resonance filter, the cutoff is also the frequency zone that gets boosted.

For example, if you have a low-pass filter and you set the cutoff frequency high (e.g. 20 kHz), the filter will not affect the sound. All the audible frequencies will pass through undisturbed. As you lower the cutoff frequency to something like 40 Hz (the low string on a bass guitar), it sounds like someone is putting a blanket over the speaker. The frequencies above 40 Hz are being attenuated.

See Also Filter, High-Pass Filter, Low-Pass Filter, Resonance Filter.

D

- Decay

After reaching full velocity from the attack, this is the amount of time to turn the parameter down from full velocity to the sustain level.

See Also ADSR.

E

- Envelope Generator

A way to control (change) a parameter over time as a response to triggering, holding, and releasing a note.

Did your eyes just glaze over? Let's try again:

Imagine that you're playing a note on the keyboard and you have your other hand on a knob (volume, filter cutoff, etc.). As you play the note, you twist the knob (often up, then down... or down, then up). You do the same thing on each note. That's what an envelope generator does. See also ADSR.

F

- Fader

A slider control used to adjust the attenuation (volume) in a mixer. Faders always have an "audio" taper, which means that the attenuation amount changes on an exponential scale.

- Filter

A device that changes a sound by attenuating specific frequencies. A tone knob is an example of a simple, low-pass filter.

See Also Band-Pass Filter, High-Pass Filter, Low-Pass Filter, Resonance Filter.

G

H

- High-Pass Filter

A filter that attenuates (silences) low frequencies, but allows high frequencies to pass through.

See Also Filter, Cutoff Frequency.

L

- Low-Pass Filter

A filter that attenuates (silences) high frequencies, but allows low frequencies to pass through.

See Also Filter, Cutoff Frequency.

M

- Mute

To make no noise. A setting on an instrument that prevents any audio output.

- Mute Group

A group of instruments (samples) that should mute (stop playing) immediately after another instrument in the group is triggered.

This is typically used in hi-hats, where there's a different instrument (sample) for when the hi-hat is open or closed. With a real hi-hat, the sound of the open hi-hat will stop as soon as you close it. However, if you use two samples — the open sound will continue even after you have triggered the closed sound. By placing both instruments in the same mute group (group #1, for example)... triggering closed sound will immediately stop the open sound (and vice versa).
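The hi-hat behaviour described above can be sketched as a small voice tracker: triggering an instrument first silences every other playing instrument that shares its mute group. The class and its fields are hypothetical and only illustrate the rule.

```python
class MuteGroupVoices:
    """Tracks which instruments are sounding and applies mute groups."""

    def __init__(self):
        # instrument name -> mute group number (None means no group)
        self.playing = {}

    def trigger(self, instrument, mute_group=None):
        if mute_group is not None:
            # Stop every other instrument in the same mute group.
            for name, group in list(self.playing.items()):
                if group == mute_group and name != instrument:
                    del self.playing[name]
        self.playing[instrument] = mute_group

voices = MuteGroupVoices()
voices.trigger("kick")
voices.trigger("open hi-hat", mute_group=1)
voices.trigger("closed hi-hat", mute_group=1)
print(sorted(voices.playing))  # ['closed hi-hat', 'kick']
```

The kick has no mute group, so it keeps sounding. The open hi-hat is stopped as soon as the closed hi-hat in the same group is triggered.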

O

- Octave

A span of frequencies where the top-most frequency is exactly twice the frequency of the bottom frequency.

For example, the range 20 Hz to 40 Hz is an octave. So is 120 Hz to 240 Hz, and 575 Hz to 1150 Hz. While the frequency differences are very different (20 Hz, 120 Hz, and 575 Hz, respectively), to the human ear they sound like the same distance.
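Since each octave is a doubling, the number of octaves between two frequencies is the base-2 logarithm of their ratio. A short check of the examples above (the function name is only illustrative):

```python
import math

def octaves_between(low_hz, high_hz):
    """Number of octaves from low_hz up to high_hz."""
    return math.log2(high_hz / low_hz)

print(octaves_between(20, 40))     # 1.0
print(octaves_between(120, 240))   # 1.0
print(octaves_between(575, 1150))  # 1.0
print(octaves_between(20, 80))     # 2.0
```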

R

- Release

After the note is released, this is the amount of time to reduce the parameter from the sustain level to 0.

See Also ADSR.

- Resonance

When referring to a resonance filter, this is the parameter that determines how much of a boost (gain) to give the frequencies at the cutoff.

See Also Resonance Filter.

- Resonance Filter

A filter that gives a large boost to a very narrow range of frequencies. Typically it will be part of a high-pass or a low-pass filter, where the boosted frequencies are centered on the cut-off frequency.

See Also Filter, Cutoff Frequency, Resonance.

- Roll-off

This is the amount that frequencies are attenuated (suppressed) as the frequency changes (typically measured in dB/octave).

For example, in a low-pass filter the frequencies below the cutoff frequency are not attenuated (they pass through with the same volume). Same with the cutoff frequency. As you go above the cutoff frequency, the frequencies that are near the cutoff frequency are not attenuated very much at all. However, the frequencies that are much higher than the cutoff are attenuated (suppressed) a lot. This is usually approximated by a straight line (on a log scale) and measured in dB of attenuation per octave of frequency.

See Also Attenuation, Filter.
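The straight-line approximation of roll-off for a low-pass filter can be written as the slope multiplied by the number of octaves above the cutoff. In this sketch the 12 dB/octave default is just an example value, and the function name is hypothetical. A real filter bends gradually around the cutoff instead of turning a sharp corner.

```python
import math

def rolloff_attenuation_db(freq_hz, cutoff_hz, slope_db_per_octave=12.0):
    """Approximate low-pass attenuation in dB using a straight-line model."""
    if freq_hz <= cutoff_hz:
        # At or below the cutoff the signal passes through unchanged.
        return 0.0
    return slope_db_per_octave * math.log2(freq_hz / cutoff_hz)

print(rolloff_attenuation_db(2000, 1000))  # 12.0 (one octave above)
print(rolloff_attenuation_db(4000, 1000))  # 24.0 (two octaves above)
```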

- Rubber Band

See Time Stretch.

S

- Sample

A short audio recording of a sound, typically between .1 and 3.0 seconds long.

Note that a sample can also refer to a single, discrete, measurement (e.g. of an audio signal). It is not used in this way for this document.

- Sustain

The level to hold the parameter after finishing the decay time. This level will be maintained until the note is released.

See Also ADSR.

T

[1] If I wet my middle finger and press it hard on a sheet of paper, the circle is 0.50-in. in diameter (finger at about 45°). When my fingernails are short and I use the tip of my finger, the circle is 0.38-in (finger at about 90°). With a 120-PPI display, this is 60px and 45px.

To keep from scaling graphics too much, it might be best to define several standard PPI's and provide art for those (e.g. 72, 85, 96, 100, 120, 150).

[2] If someone has fat fingers and sharp eyes, they won't be happy with a one-size-fits-all PPI setting. Conversely, if someone has narrow fingers but dull eyes, they won't be able to find a good PPI setting, either. However, at the moment I think dividing these will add too much complexity. Having the mechanism to start with allows it to be refactored in future versions.

[3] This is what I mean by “wrap around”:

Clip 1 Beats: 1 2 3 4 5 6 1 2 3 4 5 6

Clip 2 Beats: 1 2 3 4 1 2 3 4 1 2 3 4

Whereas this would be “truncated”:

Clip 1 Beats: 1 2 3 4 5 6 1 2 3 4 5 6

Clip 2 Beats: 1 2 3 4 1 2 1 2 3 4 1 2

[4] Should this be something that the user can configure?

[5] When we go to the Sequence Editor, the clips and whatnot will be arranged as they would have been played by the group. However, after editing, would it be possible to detect that a sequence is group compatible and not convert it all to a single clip?

[6] Unfortunately, the state of the art does not allow us to embed the plugin GUI's into our layout/widgets. Maybe one day in the future.

[7] Don't be too sexy with this until we've tackled all the bigger issues.